What Does This Key Open? - Docker Container Images (4/4)

This post is the fourth part of a series on our recent adventures into DockerHub. Before you read it, you may be interested in the previous instalments, which are:

- "I don't need no zero-dayz" - Docker Container Images (1/4)

- Layer Cake: How Docker Handles Filesystem Access (2/4)

- Learning To Crawl (For DockerHub Enthusiasts, Not Toddlers) (3/4)


As you may recall from our previous posts, we've achieved quite a lot so far - we've found a way to fetch a large amount of data from DockerHub efficiently, worked out how to store it in a proper relational database, and ended up with over 30 million files which we now need to scan for sensitive information.

This is no easy task at this scale. In this post, we'll talk about how we tackled it, mutating open-source software to build our own secret discovery engine. We'll then talk about the thousands of secrets that we found, and share some insights into exactly where they were exposed - because, well, it seems everyone does this.

As you will likely gather throughout this exercise, we began to conclude that our findings were limited only by our imagination.

For anyone wondering - no, we won't be sharing actual secrets in this blogpost.

Leveraging Gitleaks

Our secret discovery engine is based on the open-source tool Gitleaks. This provides a great basis for our work - it's designed to run on developer workstations, and integrate with Git to scan source code for secret tokens a developer may otherwise inadvertently commit to a shared source control system. It can also operate on flat files, without Git integration.

It has some neat features - for example, it is able to recognise the structure of many access tokens (such as AWS access key IDs, which always begin with a four-character prefix specifying their type). It also measures the entropy of potential secrets, which is useful for finding randomly generated secrets while ignoring low-entropy false positives such as '1111111'.
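To make those two ideas concrete, here is a minimal Python sketch: a prefix pattern for AWS access key IDs and a Shannon entropy score. The prefix list is an illustrative subset (AKIA for long-term keys, ASIA for temporary ones) and the 3.5-bit threshold is our own assumption. Neither is copied from Gitleaks' rule set.

```python
import math
import re
from collections import Counter

# Illustrative subset of AWS access key ID prefixes (not Gitleaks' full rule).
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")

def shannon_entropy(s: str) -> float:
    """Bits of entropy per character, from the character frequencies in s."""
    if not s:
        return 0.0
    n = len(s)
    return sum((c / n) * math.log2(n / c) for c in Counter(s).values())

def looks_random(s: str, threshold: float = 3.5) -> bool:
    # Hypothetical threshold; real scanners tune this per rule.
    return shannon_entropy(s) >= threshold
```

For example, `AKIAIOSFODNN7EXAMPLE`, the placeholder key ID used in AWS's own documentation, matches the prefix pattern and scores roughly 3.68 bits per character, while '1111111' scores 0.0 and is discarded.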

However, it is clear that the software was not designed with our specific scenario (batch scanning of millions of files) in mind.

We ran into some trivial problems when first exploring. For example, consider invoking Gitleaks with a command line similar to the following:

$ gitleaks detect --no-git --source /files/file1 --source /files/file2

While I expected this command to scan both files specified, it actually scanned only the final file, silently ignoring the first! Fortunately, Gitleaks is open source, so modifying it to handle collections of files, archives and more within our architecture wasn't a problem.
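If patching is not an option, one workaround is to stage every file into a single directory and point one `--source` at that directory. This is a sketch under assumptions, not what we ended up doing. The `detect`, `--no-git`, `--source`, `--report-format` and `--report-path` options are those of the Gitleaks v8 command line. The helper names are hypothetical. The original behaviour is presumably because `--source` is a single-valued flag, so each repetition overwrites the last.

```python
import shutil
import subprocess
from pathlib import Path

def stage_and_build_command(paths, workdir):
    """Copy every file into one staging directory and return a Gitleaks
    command that scans that directory in a single invocation."""
    staging = Path(workdir) / "staged"
    staging.mkdir(parents=True, exist_ok=True)
    for i, src in enumerate(paths):
        src = Path(src)
        # Prefix with an index: files from different images often share a basename.
        shutil.copy(src, staging / f"{i:08d}_{src.name}")
    return [
        "gitleaks", "detect", "--no-git",
        "--source", str(staging),
        "--report-format", "json",
        "--report-path", str(Path(workdir) / "report.json"),
    ]

def run_gitleaks(cmd):
    # Gitleaks exits non-zero when it finds leaks, so we do not pass check=True.
    return subprocess.run(cmd, capture_output=True, text=True)
```

Usage would be along the lines of `run_gitleaks(stage_and_build_command(["/files/file1", "/files/file2"], "/tmp/scan"))`. The index prefix makes it easy to map findings back to the original paths, at the cost of copying every file once.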

A second problem, this time affecting performance, rears its head when Gitleaks detects a large number of secrets. Gitleaks keeps a slice and appends each detected secret to it, but for very large numbers of secrets, repeatedly growing that slice causes high CPU load and memory fragmentation, so it is best to preallocate it.

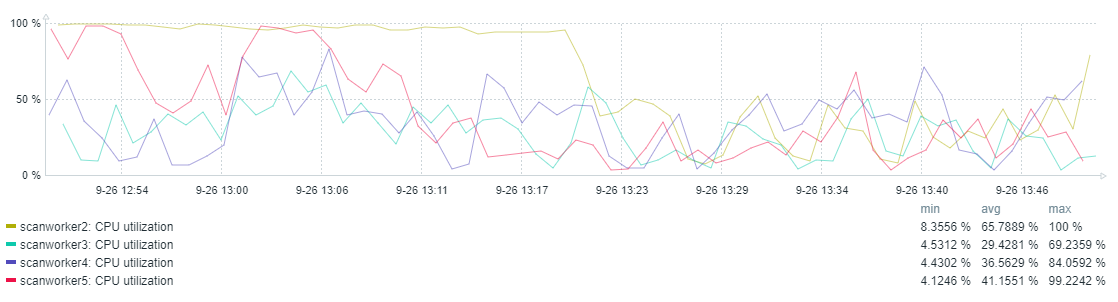

With these tweaks, we're ready to use our mutated Gitleaks-based engine to find secrets. We created a table in the database to hold the results of our analysis, and used a number of EC2 nodes to fill it.

Falsely Positive

Of course, when dealing with a fileset of this size, false positives are an inevitability. These fall into two main categories.

Firstly, tokens which are clearly not intended for production use. For example, the Python crypto package ships with tests which use static keys. These keys are, for our purposes, 'well known', and should not be included in our output. While it is tempting to ignore these completely, we instead store them in the database and mark them as 'well known'. This allows us to build a dictionary of 'well known' secrets for future projects.

Secondly, various items of text will be detected by Gitleaks despite being clearly not access keys. For example, consider the following C code:

unsigned char* key = "ASCENDING";

Gitleaks ships with a rule that would classify this as a secret token, since the string is assigned to a variable named key.
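To see why this fires, here is a deliberately naive keyword-assignment rule in Python. It is our own simplification for illustration, not Gitleaks' actual rule. It shows how matching on the variable name alone flags `"ASCENDING"`, even though nothing secret is involved. This is why such rules are usually paired with extra checks such as the entropy measurement described earlier.

```python
import re

# Deliberately naive, illustrative rule: any quoted string of 6+ characters
# assigned to an identifier whose name contains "key" (case-insensitive).
NAIVE_KEY_RULE = re.compile(r"""(?i)\b\w*key\w*\s*=\s*["']([^"']{6,})["']""")

def naive_matches(line):
    """Return every value the naive rule would report for this line."""
    return [m.group(1) for m in NAIVE_KEY_RULE.finditer(line)]
```

Calling `naive_matches('unsigned char* key = "ASCENDING";')` reports `ASCENDING`, exactly the kind of false positive described above.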

We decided to mitigate both of these categories using filters on the name of the file in question. While Gitleaks includes functionality to do this, our use of a database and the one-to-many relationship between files and filenames complicates things, and so we use Python to match filenames after scanning.

Filename Ignore-Listing

Since a large number of files are being ignored, and because we wanted fine-grained control over them, we were careful when designing the system that assesses whether a given filename is 'well known'.

We decided on a file format for holding regular expressions with entries similar to the following:

-
  pattern: .*/vendor/lcobucci/jwt/test/unit/Signer/RsaTest\.php
  mustmatch:
    - /var/www/html/vendor/lcobucci/jwt/test/unit/Signer/RsaTest.php

Here, we can see the pattern to be matched, along with a test string in the 'mustmatch' array. Before analysis starts, the regular expression is tested against each test string, and if any of them fails to match, an error is raised. While this is of little help for simple expressions like the one shown, it is invaluable when attempting more complex patterns:

-
  pattern: .*?/(lib(64)?/python[0-9\.]*/(site|dist)-packages/|.*\.egg/)Cryptodome/SelfTest/(Cipher|PublicKey|Signature)(/__pycache__)?/(test_pkcs1_15|test_import_RSA|test_import_ECC|test_pss)(\.cpython-[0-9]*)?\.(pyc|py)
  mustmatch:
    - /usr/local/lib/python2.7/site-packages/Cryptodome/SelfTest/Cipher/test_pkcs1_15.pyc
    - /usr/local/lib/python2.7/site-packages/Cryptodome/SelfTest/PublicKey/test_import_RSA.pyc
    - /galaxy_venv/lib/python2.7/site-packages/Cryptodome/SelfTest/PublicKey/test_import_ECC.pyc
    - /usr/local/lib/python2.7/site-packages/Cryptodome/SelfTest/Signature/test_pkcs1_15.pyc
    - /usr/local/lib/python2.7/site-packages/Cryptodome/SelfTest/Signature/test_pss.py
    - /usr/local/lib/python3.7/site-packages/Cryptodome/SelfTest/Cipher/__pycache__/test_pkcs1_15.cpython-37.pyc
    - /usr/local/lib/python3.6/dist-packages/pycryptodomex-3.9.8-py3.6-linux-x86_64.egg/Cryptodome/SelfTest/Signature/test_pss.py
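The 'mustmatch' self-check can be sketched in a few lines of Python. This is an illustration under assumptions, not our production code:

- Entries are plain objects rather than parsed YAML, which would need a third-party parser.
- Patterns must match the whole path (`re.fullmatch`).
- `is_well_known` encodes one possible, hypothetical policy for a file that is stored under several paths.

```python
import re
from dataclasses import dataclass, field

@dataclass
class IgnoreEntry:
    pattern: str
    mustmatch: list = field(default_factory=list)

def compile_entries(entries):
    """Compile each pattern, refusing to start if any test string fails to match."""
    compiled = []
    for entry in entries:
        rx = re.compile(entry.pattern)
        for sample in entry.mustmatch:
            if not rx.fullmatch(sample):
                raise ValueError(f"pattern {entry.pattern!r} does not match {sample!r}")
        compiled.append(rx)
    return compiled

def is_well_known(paths, compiled):
    # Assumed policy: a file seen under several paths is 'well known' only if
    # it has at least one path and every path matches some ignore pattern.
    return bool(paths) and all(any(rx.fullmatch(p) for rx in compiled) for p in paths)
```

Failing fast at start-up means a typo in a complex pattern surfaces immediately, rather than as thousands of unexpected detections after a long scan.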

In addition, separate tests ensure 'true positive' detections occur, by scanning known-interesting files and ensuring that a detection is raised appropriately.

Context-Aware Ignore-Listing

While filename-based ignoring is a great help, there are a few specific instances where it falls short. For example, text similar to the following appears often in dmesg output:

[ 0.630554] Loaded X.509 cert 'Magrathea: Glacier signing key: 00a5a65759de474bc5c43120880c1b94a539f431'

Gitleaks will helpfully alert us to this, believing the key to be sensitive information. In reality, it is simply a fingerprint, and useless to an attacker. Since there are a lot of keys in use, it is impractical to list every one we are not interested in, so instead we use a regex that isolates the secret within strings like these:

regex:
  -
    pattern: ".*Loaded( X\\.509)? cert '.*: (?P<secret>.*)'"
    mustmatch:
      - "Mar 08 12:58:16 localhost kernel: Loaded X.509 cert 'Red Hat Enterprise Linux kpatch signing key: 4d38fd864ebe18c5f0b72e3852e2014c3a676fc8'"
      - "MODSIGN: Loaded cert 'Oracle America, Inc.: Ksplice Kernel Module Signing Key: 09010ebef5545fa7c54b626ef518e077b5b1ee4c'"

Note the use of a named capture group - named 'secret' - which is compared to the secret that Gitleaks detects. If this expression matches, the detection is considered 'well known'.
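In Python, that comparison might look like the sketch below. The function name is hypothetical, and we assume `re.match` semantics, which anchor the match at the start of the line. The pattern is the one from the entry above, with the YAML escape `\\.` written as the regex `\.`.

```python
import re

# Context rule from the entry above; the named group captures the fingerprint.
CERT_CONTEXT = re.compile(r".*Loaded( X\.509)? cert '.*: (?P<secret>.*)'")

def is_fingerprint_context(line, detected_secret):
    """True if the secret Gitleaks detected is exactly the certificate
    fingerprint in a 'Loaded ... cert' line, i.e. it is 'well known'."""
    m = CERT_CONTEXT.match(line)
    return bool(m) and m.group("secret") == detected_secret
```

Comparing the captured group against the detected secret, rather than merely matching the line, means a genuine secret that happens to sit on such a line elsewhere would still be reported.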

SSH Keypairs

One datapoint with a low false-positive rate and high impact is the number of SSH private keys found in publicly accessible DockerHub repositories. Our mutated engine recognises these, so identifying them is easy (although some work must be done to remove example keys).

We locate a very large number of these files - 54169, to be exact:

+-----------------------------------+----------+-------------------------+

| description | count(*) | count(distinct(secret)) |

+-----------------------------------+----------+-------------------------+

| Private Key | 54169 | 9693 |

+-----------------------------------+----------+-------------------------+

But many of them are of no consequence. Taking a look at the filenames, for example, we can immediately discard 2329 self-signed CA files:

mysql> select filename, count(*) from gitleaksArtifacts \

where Description = "Private Key" \

group by filename \

order by count(*) desc \

limit 10;

+-----------------------+----------+

| filename | count(*) |

+-----------------------+----------+

| rsa.2028.priv | 2745 |

| ssl-cert-snakeoil.key | 2329 |

| server.key | 1972 |

| http_signing.md | 1488 |

| pass2.dsa.1024.priv | 1350 |

| ssh_host_rsa_key | 1219 |

| ssh_host_dsa_key | 1063 |

| ssh_host_ecdsa_key | 1016 |

| ssh_host_ed25519_key | 897 |

| server1.key | 805 |

+-----------------------+----------+

The presence of files prefixed with ssh_host, however, is interesting, as such host keys are considered sensitive. Let's look at them more closely:

mysql> select filename, count(*) from gitleaksArtifacts \

where Description = "Private Key" \

and filename like 'ssh_host_%_key' \

group by filename \

order by count(*);

+-----------------------+----------+

| filename | count(*) |

+-----------------------+----------+

| ssh_host_ecdsa521_key | 1 |

| ssh_host_ecdsa256_key | 1 |

| ssh_host_ecdsa384_key | 1 |

| ssh_host_ed25519_key | 897 |

| ssh_host_ecdsa_key | 1016 |

| ssh_host_dsa_key | 1063 |

| ssh_host_rsa_key | 1219 |

+-----------------------+----------+

These 4198 files, spanning 611 distinct images, should probably not be exposed to the public. However, we can actually take things one step further, and verify if any hosts on the public Internet are using these keys.

Enter: Shodan

You may be familiar with Shodan.io, which gathers a wealth of banner information from various services exposed to the public Internet. One feature it provides is the ability to search by key fingerprint, which we can easily calculate from the private keys we fetched above.

Shodan provides a straightforward API, so our key-locating application is simple to write. Most of the code is in manipulating private keys to find the corresponding public key, and thus the hash.

import base64
import binascii
import hashlib
import ipaddress
import re

import shodan                     # pip install shodan
from Crypto.PublicKey import RSA  # pip install pycryptodome

# `db` is our own database wrapper from the earlier posts (not shown here).
shodanInst = shodan.Shodan('<your API key here>')

for artifact in db.fetchGitleaksArtifacts():
    if artifact.description != 'Private Key':
        continue

    try:
        # Strip the PEM header/footer and any stray quotes, then decode the body.
        keyAsc = re.sub(r"\s*?-*(BEGIN|END).*?PRIVATE KEY-*\s*?", "", artifact.secret)
        keyAsc = keyAsc.replace('"', "")
        keyBinary = base64.b64decode(keyAsc)
        keyPriv = RSA.importKey(keyBinary)
    except (binascii.Error, ValueError):
        # Undecodable, or not an RSA key: skip it.
        continue

    # Export the public half in OpenSSH format, snip off the "ssh-rsa" prefix,
    # and b64-decode the remaining key blob.
    keyPublic = keyPriv.publickey()
    pubKeyBytes = base64.b64decode(keyPublic.exportKey('OpenSSH').split(b' ')[1])

    # The MD5 of that blob, as colon-separated hex, is the SSH key fingerprint.
    pubHash = hashlib.md5(pubKeyBytes).digest()
    pubHashStr = ":".join(f"{x:02x}" for x in pubHash)

    # Now we can do the actual search.
    result = shodanInst.search(f'Fingerprint: {pubHashStr}')
    for svc in result['matches']:
        db.insertArtifactSighting(
            artifact.fileid,
            str(ipaddress.ip_address(svc['ip']))
        )

This query yields very interesting results, although not what we expected. We see 742 matches across 690 unique IP addresses, but what is most interesting is the specific keys in use:

mysql> select concat(artifact_path.path, '/', artifacts.filename), count(distinct(ipaddress)) \
from artifactsOnline \
join artifacts on artifacts.id = artifactsOnline.fileid \
join artifact_path on artifact_path.id = artifacts.pathid \
join artifactHashes on artifactHashes.id = artifacts.hashID \
where path not like '/pentest/exploitation%' \
group by concat(artifact_path.path, '/', artifacts.filename) \
order by count(distinct(ipaddress));

+-------------------------------------------------------------------------------------------+----------------------------+

| concat(artifact_path.path, '/', artifacts.filename) | count(distinct(ipaddress)) |

+-------------------------------------------------------------------------------------------+----------------------------+

| /etc/ssh/ssh_host_rsa_key | 27 |

| /usr/local/lib/python2.6/dist-packages/bzrlib/tests/stub_sftp.pyc | 23 |

| /usr/lib64/python2.7/site-packages/bzrlib/tests/stub_sftp.pyo | 23 |

| /usr/lib/python2.7/site-packages/bzrlib/tests/stub_sftp.py | 23 |

| /usr/lib/python2.7/site-packages/bzrlib/tests/stub_sftp.pyc | 23 |

| /usr/lib64/python2.7/site-packages/bzrlib/tests/stub_sftp.pyc | 23 |

| /usr/lib/python2.7/site-packages/twisted/conch/manhole_ssh.pyc | 6 |

| /usr/share/doc/python-twisted-conch/howto/conch_client.html | 6 |

| /usr/local/lib/python2.7/site-packages/twisted/conch/manhole_ssh.pyc | 6 |

| /usr/local/lib/python2.7/dist-packages/twisted/conch/test/keydata.py | 6 |

| /usr/local/lib/python2.7/dist-packages/twisted/conch/manhole_ssh.pyc | 6 |

| /usr/lib/python2.7/dist-packages/twisted/conch/test/keydata.py | 6 |

| /usr/lib/python2.7/dist-packages/twisted/conch/manhole_ssh.pyc | 6 |

| /usr/lib/python2.7/dist-packages/twisted/conch/manhole_ssh.py | 6 |

| /root/.local/lib/python2.7/site-packages/twisted/conch/manhole_ssh.pyc | 6 |

| /usr/local/lib/node_modules/piriku/node_modules/ssh2/test/fixtures/ssh_host_rsa_key | 4 |

| /usr/local/lib/node_modules/strongloop/node_modules/ssh2/test/fixtures/ssh_host_rsa_key | 4 |

| /app/code/env/lib/python3.8/site-packages/synapse/util/__pycache__/manhole.cpython-38.pyc | 2 |

| /app/code/env/lib/python3.8/site-packages/synapse/util/manhole.py | 2 |

| /tmp/mtgolang/src/github.com/mtgolang/ssh2docker/cmd/ssh2docker/main.go | 1 |

+-------------------------------------------------------------------------------------------+----------------------------+

It seems that a large number of hosts are using example keys shipped with various packages, mostly Python ones. This is an interesting finding in itself, and something we may examine in a later blog post.

We can also see the host key for a Tomcat installation being used on 82 individual Docker images - that's more like what we expected to see. This verifies that these keys are sensitive, and "double-confirms", as we say here in Singapore, that they should not be disclosed.
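The fingerprint step in the script above does not actually need RSA. That script skips any key that `RSA.importKey` cannot parse. By contrast, any OpenSSH public key line can be fingerprinted with the standard library alone, because the fingerprint is just a hash of the base64-decoded key blob. Such a line could come, for instance, from `ssh-keygen -y -f <private key file>`, which also handles DSA, ECDSA and Ed25519 keys.

Below is a minimal sketch with hypothetical function names. `sha256_fingerprint` produces the `SHA256:` form that current OpenSSH releases print by default, which is handy for cross-checking against `ssh-keygen -l`.

```python
import base64
import hashlib

def _key_blob(openssh_pubkey_line: str) -> bytes:
    # Line format: "<key type> <base64 blob> [comment]"
    return base64.b64decode(openssh_pubkey_line.split()[1])

def md5_fingerprint(openssh_pubkey_line: str) -> str:
    """Colon-separated MD5 fingerprint, the format the script above searches for."""
    digest = hashlib.md5(_key_blob(openssh_pubkey_line)).digest()
    return ":".join(f"{b:02x}" for b in digest)

def sha256_fingerprint(openssh_pubkey_line: str) -> str:
    """'SHA256:' plus unpadded base64 of the SHA-256 digest of the key blob."""
    digest = hashlib.sha256(_key_blob(openssh_pubkey_line)).digest()
    return "SHA256:" + base64.b64encode(digest).decode().rstrip("=")
```

Because only the blob is hashed, the same helper covers every key type, which would let the Ed25519 and ECDSA host keys found earlier be searched as well.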

Social Media

Social media keys were also present in the dump. Let's take a look:

+----------------------------------+----------+-------------------------+

| description | count(*) | count(distinct(secret)) |

+----------------------------------+----------+-------------------------+

| Twilio API Key | 220 | 3 |

| Facebook | 27 | 12 |

| LinkedIn Client ID | 18 | 6 |

| LinkedIn Client secret | 16 | 6 |

| Twitter API Key | 9 | 6 |

| Twitter API Secret | 7 | 5 |

| Flickr Access Token | 3 | 2 |

| Twitter Access Secret | 1 | 1 |

+----------------------------------+----------+-------------------------+

Of the 12 unique Facebook keys (27 detections in total), one appears to be invalid, consisting of all zeros, leaving 11 unique keys which appear valid.

One of the Flickr access tokens is located in a file named /workspace/config.yml. This file contains (in addition to the detected Flickr token) what appear to be valid credentials to Amazon AWS, Instagram, Google Cloud Vision, and others.

Likewise, while examining the detections for Twitter secrets, we find only a few keys, but the files containing them also include AWS tokens, SendGrid API keys, Slack tokens, Salesforce tokens, and others.

mysql> select distinct(filename), count(*) from gitleaksArtifacts \

where description like 'Twitter%' \

group by filename \

order by count(*) desc;

+---------------------+----------+

| filename | count(*) |

+---------------------+----------+

| .env.example | 6 |

| settings.py | 3 |

| GenericFunctions.js | 2 |

| DVSA-template.yaml | 2 |

| development.env | 2 |

| twitter.md | 2 |

+---------------------+----------+

It is very clear that keys and tokens often 'cluster' together in the same file.
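Spotting such clusters is a simple grouping exercise. The sketch below assumes detections arrive as `(path, description)` pairs, for instance from a query against our results table. The function name and the two-rule threshold are illustrative choices. A file that trips several different rules is a natural candidate to review by hand first.

```python
from collections import defaultdict

def clustered_files(detections, min_kinds=2):
    """Given (path, rule_description) pairs, return (path, sorted rule names)
    for files that triggered at least min_kinds distinct rules, most varied first."""
    kinds = defaultdict(set)
    for path, description in detections:
        kinds[path].add(description)
    hits = [(path, sorted(rules)) for path, rules in kinds.items() if len(rules) >= min_kinds]
    return sorted(hits, key=lambda item: (-len(item[1]), item[0]))
```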

Stripe Payment Processor Keys

The payment processor 'Stripe' has a lot of structure in its tokens, and so finding them is particularly easy. Our mutated engine located 88 unique secrets:

+----------------------------------+----------+-------------------------+

| description | count(*) | count(distinct(secret)) |

+----------------------------------+----------+-------------------------+

| Stripe | 1563 | 88 |

+----------------------------------+----------+-------------------------+

Here are the first three of them, to show just how much structure is present:

mysql> select concat(path, '/', filename), \

gitleaksArtifacts.secret from gitleaks_result \

join gitleaksArtifacts on \

gitleaksArtifacts.fileid = gitleaks_result.fileid \

where \

gitleaks_result.description = 'Stripe' \

and isWellKnown = 0 \

order by gitleaksArtifacts.hash \

limit 3;

+---------------------------------------------+-------------------------------------------------------------------------------------------------------------+

| concat(path, '/', filename) | secret |

+---------------------------------------------+-------------------------------------------------------------------------------------------------------------+

| /app/code/packages/server/stripeConfig.json | pk_live_51IvkOPLx4fyREDACTEDREDACTED |

| /app/code/packages/server/stripeConfig.json | pk_test_51IvkOPLx4fybOTqJetV23Y5S9REDACTEDREDACTED |

| /app/code/packages/server/stripeConfig.json | pk_test_51IvkOPLx4fybOTqJetV2REDACTEDREDACTED |

+---------------------------------------------+-------------------------------------------------------------------------------------------------------------+

3 rows in set (0.56 sec)

Note the leading pk_ or sk_, identifying a 'publishable key' (which is not sensitive) or a 'secret key' (which is). Our dataset contains 47 unique pk_ keys and 41 unique sk_ keys.

Of those 41 unique secret keys, 35 appeared to be test credentials (starting with the string sk_test), and six appeared to be 'live', starting with the string sk_live. Ouch!
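Because the prefix carries the meaning, triage is mechanical. The following is a minimal Python sketch with a hypothetical function name. Treating everything after the prefix as alphanumeric is our simplifying assumption rather than Stripe's published format. Stripe also issues restricted keys with an rk_ prefix, which this sketch deliberately ignores.

```python
import re

# pk_ = publishable (safe to expose), sk_ = secret; test_/live_ = mode.
STRIPE_KEY = re.compile(r"^(?P<kind>pk|sk)_(?P<mode>test|live)_[0-9A-Za-z]+$")

def classify_stripe_key(token):
    """Return {'sensitive': bool, 'live': bool}, or None if not a pk_/sk_ key."""
    m = STRIPE_KEY.match(token)
    if not m:
        return None
    return {
        "sensitive": m.group("kind") == "sk",
        "live": m.group("mode") == "live",
    }
```

Filtering for keys that are both sensitive and live isolates the sk_live credentials that warrant immediate attention.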

Google Cloud Platform (GCP) Keys

+----------------------------------+----------+-------------------------+

| description | count(*) | count(distinct(secret)) |

+----------------------------------+----------+-------------------------+

| GCP API key | 411 | 89 |

+----------------------------------+----------+-------------------------+

While we found a large number of keys for the Google Cloud Platform, it is difficult to ascertain the permissions granted to them. We suspect that a portion of these keys are intended for wide-scale public deployment; for example, keys for crash reporting, which are configured to allow anonymous uploads but little else.

Amazon Web Services (AWS) Keys

+----------------------------------+----------+-------------------------+

| description | count(*) | count(distinct(secret)) |

+----------------------------------+----------+-------------------------+

| AWS | 139362 | 42095 |

+----------------------------------+----------+-------------------------+

Again, it is difficult to ascertain the privileges associated with these forty-two thousand unique keys, and thus if they are truly sensitive or not.

Examining them individually does yield some context that helps, although it is not practical to do this at scale.

One thing that may interest readers is the location in which these keys were found. Three were located in the .bash_history file belonging to the superuser, indicating that these files had been improperly cleaned. Eleven other keys were found in improperly sanitized log files, and two in backups of MySQL database content.

Database Dumps

One of the custom detections we added to our mutated engine looks for database dumps produced by the mysqldump tool. We applied this detection to only a quarter of the dataset, and still found a large number of these files - almost 400.

While some of them were installation templates, there were also some which contained live data:

mysql> select \

distinct(concat(artifact_path.path, '/', artifacts.filename)), \

artifacts.filesize \

from gitleaksArtifacts \

join artifacts on artifacts.id = gitleaksArtifacts.fileid \

join artifact_path on artifact_path.id = artifacts.pathid \

where description = 'Possible database dump' \

order by filesize desc \

limit 25;

+---------------------------------------------------------------------------------+----------+

| (concat(artifact_path.path, '/', artifacts.filename)) | filesize |

+---------------------------------------------------------------------------------+----------+

| /docker-entrypoint-initdb.d/zmagento.sql | 72135471 |

| /docker-entrypoint-initdb.d/bitnami_mediawiki.sql | 10913302 |

| /app/wordpess.sql.bk | 5321931 |

| /var/www/html/sql/stackmagento.sql | 5224835 |

| /var/www/html/sql/stackmagento.sql | 4379391 |

| //ogAdmBd.sql | 1046438 |

| //all-db.ql | 918670 |

| /var/www/html/backup.sql | 703961 |

| /tmp/db.sql | 683875 |

| /var/www/html/cphalcon/tests/_data/schemas/mysql/mysql.dump.sql | 603179 |

| /opt/cphalcon-3.1.x/tests/_data/schemas/mysql/mysql.dump.sql | 603179 |

| /cphalcon/unit-tests/schemas/mysql/phalcon_test.sql | 597577 |

| /usr/local/src/cphalcon/unit-tests/schemas/mysql/phalcon_test.sql | 597577 |

| /usr/local/src/cphalcon/unit-tests/schemas/mysql/phalcon_test.sql | 596903 |

| //allykeys.sql | 423963 |

| /ECommerce-Java/zips.sql | 399288 |

| /app/code/database/setup.sql | 379063 |

| //dump.sql | 366901 |

| /tmp/hhvm/third-party/webscalesqlclient/mysql-5.6/mysql-test/r/mysqldump.result | 285948 |

| /home/pubsrv/mysql/mysql-test/r/mysqldump.result | 229737 |

| /usr/share/mysql-test/r/mysqldump.result | 223161 |

| /usr/share/zoneminder/db/zm_create.sql | 218651 |

| /home/SOC-Fall-2015/ApacheCMDA-Backend/DBDump/Dump20150414.sql | 191648 |

| /home/apache/ApacheCMDA/ApacheCMDA-Backend/DBDump/Dump20150414.sql | 191648 |

| /opt/mysql-backup/tmp/ubuntu-backup-2016-09-30-08-13-34.sql | 106985 |

+---------------------------------------------------------------------------------+----------+

25 rows in set (2.29 sec)

Browsing some of these files, we spotted credentials for a variety of CMSs, and more.
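We haven't described the exact rule here, so what follows is only an illustrative heuristic with hypothetical names. mysqldump normally begins its output with a comment such as `-- MySQL dump 10.13  Distrib ...`, and MariaDB's version of the tool writes `-- MariaDB dump ...`. Checking the first few lines of each file is therefore a cheap first filter. A header match says nothing about whether a dump holds live data or an installation template, so results still need the manual triage described above.

```python
# Header comments written at the top of a dump by mysqldump and MariaDB's fork.
DUMP_MARKERS = ("-- MySQL dump", "-- MariaDB dump")

def looks_like_mysqldump(path, max_lines=5):
    """Heuristic: does one of the first max_lines lines carry a dump header?"""
    with open(path, "r", encoding="utf-8", errors="replace") as fh:
        for _, line in zip(range(max_lines), fh):
            if line.startswith(DUMP_MARKERS):
                return True
    return False
```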

And Then Just... Others

Our mutated engine matched a large number of other keys and, in general, bad things(tm).

+----------------------------------+----------+-------------------------+

| description | count(*) | count(distinct(secret)) |

+----------------------------------+----------+-------------------------+

| Generic API Key | 941621 | 94533 |

| Database connection string | 32257 | 3797 |

| JSON Web Token | 1817 | 154 |

| Etsy Access Token | 506 | 12 |

| Linear Client Secret | 386 | 12 |

| Slack Webhook | 231 | 32 |

| Slack token | 120 | 22 |

| EasyPost test API token | 95 | 28 |

| EasyPost API token | 83 | 28 |

| Bitbucket Client ID | 27 | 3 |

| GitHub Personal Access Token | 16 | 2 |

| Airtable API Key | 16 | 5 |

| Dropbox API secret | 13 | 7 |

| Plaid Secret key | 12 | 2 |

| SendGrid API token | 12 | 7 |

| Sentry Access Token | 12 | 6 |

| GitHub App Token | 12 | 1 |

| Algolia API Key | 11 | 4 |

| Alibaba AccessKey ID | 7 | 3 |

| Plaid Client ID | 6 | 1 |

| HubSpot API Token | 6 | 4 |

| Heroku API Key | 6 | 1 |

| GitLab Personal Access Token | 6 | 3 |

| Mailgun private API token | 4 | 4 |

| SumoLogic Access ID | 4 | 2 |

| Lob API Key | 3 | 1 |

| Atlassian API token | 3 | 1 |

| Mailgun webhook signing key | 2 | 1 |

| Grafana service account token | 2 | 1 |

| Asana Client Secret | 2 | 2 |

| Grafana api key | 1 | 1 |

| Dynatrace API token | 1 | 1 |

+----------------------------------+----------+-------------------------+

Of most interest is the 'Generic API Key' rule. Unfortunately, it is especially prone to false positives, but due to its wide scope, it detects secrets that other rules miss: we found it locating secrets in .bash_history files and web access logs, to name a couple. For instance, it found the following credit card information, which was thankfully non-sensitive since it had been sanitised:

[2016-05-02 18:43:07] main.DEBUG: TODOPAGO - MODEL PAYMENT - Response: {"StatusCode":-1,"StatusMessage":"APROBADA","AuthorizationKey":"<watchtowr redacted>","EncodingMethod":"XML","Payload":{"Answer":{"DATETIME":"2016-05-02T15:43:01Z","CURRENCYNAME":"Peso Argentino","PAYMENTMETHODNAME":"VISA","TICKETNUMBER":"12","AUTHORIZATIONCODE":"REDACTED","CARDNUMBERVISIBLE":"45079900XXREDACTED","BARCODE":"","OPERATIONID":"000000001","COUPONEXPDATE":"","COUPONSECEXPDATE":"","COUPONSUBSCRIBER":"","BARCODETYPE":"","ASSOCIATEDDOCUMENTATION":""},"Request":{"MERCHANT":"2658","OPERATIONID":"000000001","AMOUNT":"50.00","CURRENCYCODE":"32","AMOUNTBUYER":"50.00","BANKID":"11","PROMOTIONID":"2706"}}} {"is_exception":false} []

Other files were found via inventive filename searches; a search for wallet.dat yields six files, for example.

At this point, it seems our discoveries are limited only by our imagination.

File Metadata

In addition to logging the contents and name of each file, we also log its UNIX permission bits. This allows us to query for unusual or (deliberately?) weak configurations. The following query lists the distinct names of all SUID-root files across all images, yielding 325 results:

mysql> select distinct(filename) \

from artifacts \

where ownerUID = 0 \

and perm_suid = '1' \

order by filename;

The results are interesting, including a number of classic '90s-era security blunders. For example, some containers ship with world-writable SUID-root files (426 files in total):

mysql> select concat(path, '/', filename), hash \

from artifacts \

join artifact_path on artifact_path.id = artifacts.pathid \

join artifactHashes on artifactHashes.id = artifacts.hashid \

where perm_suid = '1' \

and perm_world_w = '1' \

order by filename;

Also present are various SUID-root shell scripts, which are notoriously difficult to secure:

mysql> select path,filename,hash \

from artifacts \

join artifact_path on artifact_path.id = artifacts.pathid \

join artifactHashes on artifactHashes.id = artifacts.hashID \

where perm_suid = '1' \

and perm_world_w = 1 \

and filename like '%.sh' \

limit 10;

+-----------------------------+----------------+------------------------------------------+

| path | filename | hash |

+-----------------------------+----------------+------------------------------------------+

| /opt/appdynamics-sdk-native | env.sh | fcd22cc86a46406ead333b1e92937f02c262406a |

| /opt/appdynamics-sdk-native | install.sh | 03f39f84664a22413b1a95cb1752e184539187cb |

| /opt/appdynamics-sdk-native | runSDKProxy.sh | fb37a2ef8b28dbb20b9265fe503c5e966e2c5544 |

| /opt/appdynamics-sdk-native | startup.sh | 90db0cac34b1a9c74cf7e402ae3da1d69975f87d |

+-----------------------------+----------------+------------------------------------------+
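Columns like perm_suid and perm_world_w boil down to bit tests on a file's mode. The sketch below shows how they could be derived from a layer tarball using only Python's standard library (`stat` and `tarfile`). It rests on assumptions: the function names are hypothetical, and this is not our ingestion code.

```python
import stat
import tarfile

def permission_flags(mode: int) -> dict:
    """Decode the permission bits we query on from a chmod-style mode."""
    return {
        "suid": bool(mode & stat.S_ISUID),
        "sgid": bool(mode & stat.S_ISGID),
        "world_writable": bool(mode & stat.S_IWOTH),
    }

def risky_members(layer_tar_path):
    """Yield (name, uid, flags) for regular files in a layer tarball that are
    both SUID and world-writable, the combination queried above."""
    with tarfile.open(layer_tar_path) as tar:
        for member in tar.getmembers():
            if not member.isfile():
                continue
            flags = permission_flags(member.mode)
            if flags["suid"] and flags["world_writable"]:
                yield member.name, member.uid, flags
```

Filtering the results on a `uid` of 0 narrows them to the SUID-root case discussed above.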

Conclusions

I hope you've enjoyed this blog series! We had a lot of fun building the infrastructure, finding credentials to some incredibly scary things (scary), and of course documenting our journey.

The main point we intend to illustrate is that it is very easy for an otherwise well-funded, careful organisation to leak secrets via DockerHub. These are not personal containers with little value, but those operated by large organisations who are, for the most part, proactive in securing their information.

This is a great example of today's shifting attack surface. Securing a modern organisation requires examining a wide and varied attack surface, and organisations need to proactively consider what theirs has truly evolved into.

At watchTowr, we believe continuous security testing is the future, enabling the rapid identification of holistic high-impact vulnerabilities that affect your organisation.

If you'd like to learn more about the watchTowr Platform, our Continuous Automated Red Teaming and Attack Surface Management solution, please get in touch.
